Part 1: Inverting the Generator

In this section, we invert the generator by solving a nonconvex optimization problem in the latent space of a pretrained StyleGAN model using the w+ representation. The following results are obtained by applying different combinations of loss functions during inversion, including an Lp (L1) loss that enforces pixel level similarity, a perceptual loss that preserves high level features from a pretrained network, and an L2 regularization term on the latent update (delta) to constrain the optimization. The following outputs below show how each combination impacts the reconstruction quality, highlighting improvements in image detail and background fidelity when using the perceptual loss and regularization .

1. various combinations of the losses including Lp loss, Preceptual loss and/or regularization loss that penalizes L2 norm of delta:

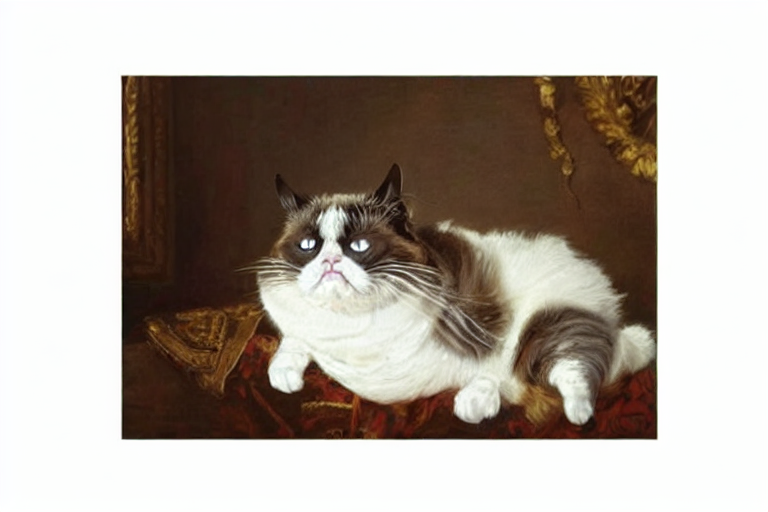

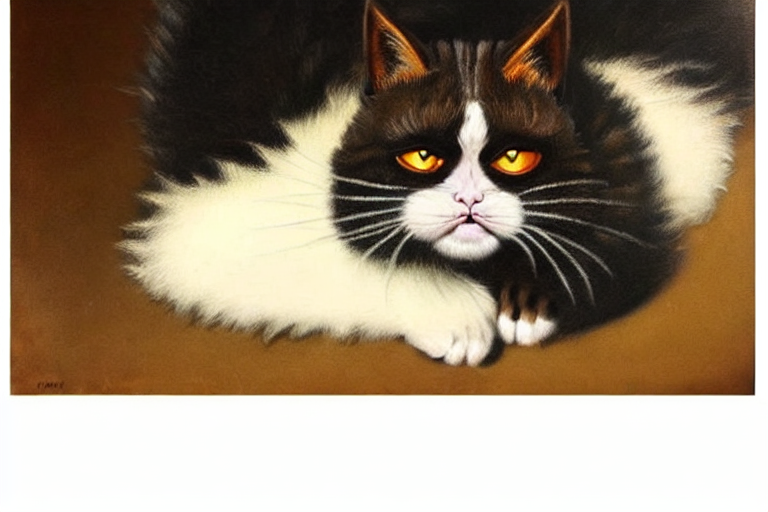

Original Image

L1 pixel loss weight 0

perc loss weight 0.01

Regularization Loss Weight 0.001

L1 pixel loss weight: 0

perc loss weight: 0

Regularization Loss Weight: 0.001

L1 pixel loss weight: 1

perc loss weight: 1

Regularization Loss Weight: 0.1

L1 pixel loss weight: 10

perc loss weight: 1

Regularization Loss Weight: 0.1

L1 pixel loss weight: 10

perc loss weight: 0

Regularization Loss Weight: 0

L1 pixel loss weight: 0

perc loss weight: 0

Regularization Loss Weight: 0.1

L1 pixel loss weight: 0.1

perc loss weight: 0.01

Regularization Loss Weight: 0.001

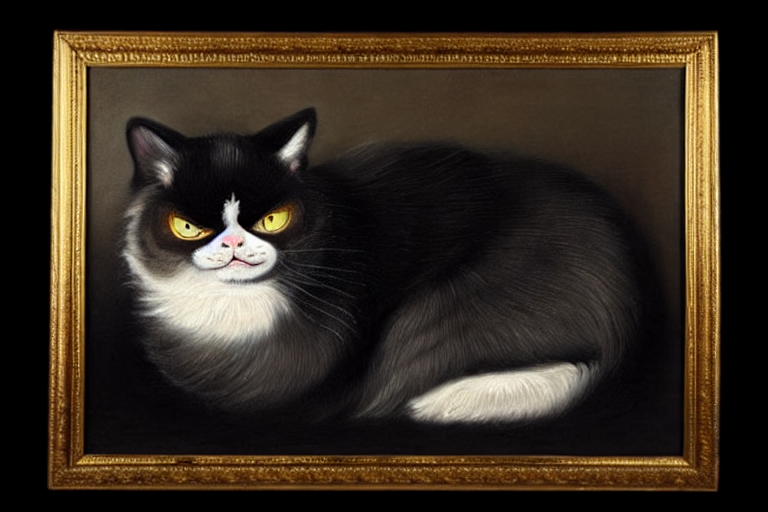

2. different generative models including vanilla GAN, StyleGAN:

The following results are obtained by optimizing a combination of L1 and perceptual losses over 1000 iterations on the z latent space. All experiments are run on A100 GPU. Our experiments demonstrate that although the vanilla GAN is computationally efficient (~40s) due to its streamlined architecture, its reconstructions are significantly less detailed and realistic compared to the higher fidelity outputs generated by StyleGAN (~47s).

Original Image

Vanilla GAN

StyleGAN

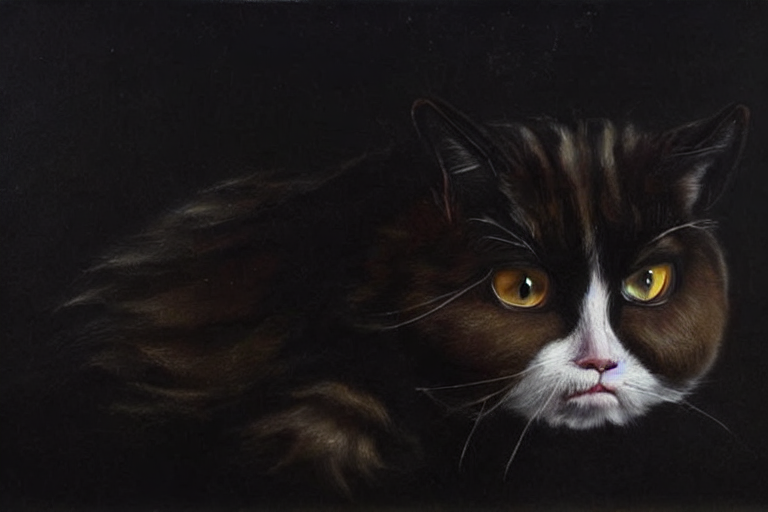

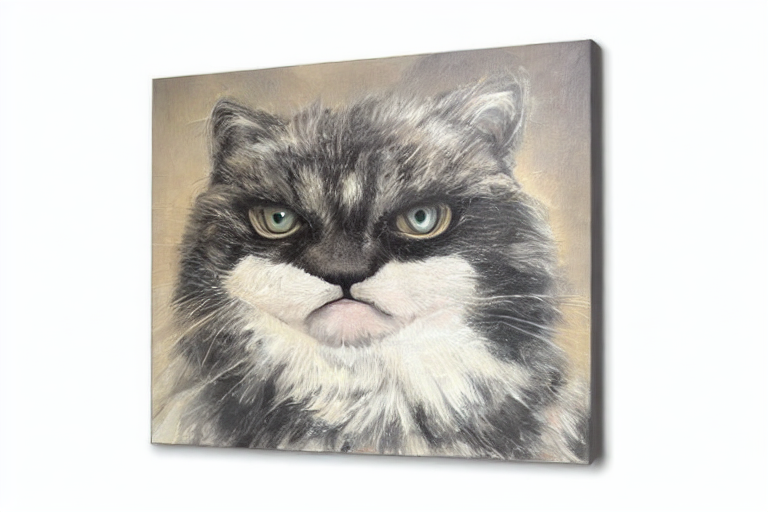

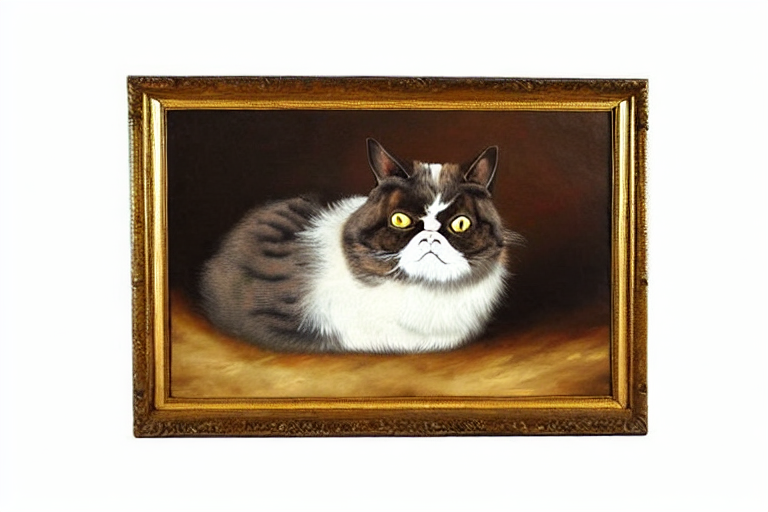

3. different latent space (latent code in z space, w space, and w+ space):

The following results are obtained by using different latent spaces on the StyleGAN model, while optimizing a combination of L1 and perceptual losses over 1000 iterations. All experiments are run on A100 GPU, each took around (~47s).

4. Give comments on why the various outputs look how they do. Which combination gives you the best result and how fast your method performs:

- When using the z latent space for StyleGAN, the optimization becomes particularly challenging because gradients must pass through the additional mapping network, often leading to reconstructions that remain very close to the initial state rather than converging to the input.

- Both w and w+ latent spaces provide improved reconstruction quality, the w+ space delivers greater detail and background fidelity owing to its increased expressiveness and flexibility.

- experiments show that vanilla GAN inversions take about 40 seconds per image, while inversions based on StyleGAN require roughly 47 seconds per image.

- The results indicate that GAN outputs tend to be unstable and that using perceptual loss is essential for generating images that closely match the reference image, whereas regularizing the latent update (delta) has little effect.

- The best performance in our experiments is achieved using StyleGAN with either the w or w+ latent spaces, as these methods capture the input image more accurately than using the z space, and StyleGAN outperforms vanilla GAN for inversion tasks.

- The best result is obtained by using the StyleGAN with w+ space, Lp loss weight 10, perceptual loss weight 0.01, and regularization loss weight 0.0. The method performs 1000 iterations within 47s.

.png)

.png)

.png)

.png)